by Shawn Chang

Background

In recent years, memory safety has become a hot topic. However, when discussing “memory safety,” it is important to first clarify what exactly is being addressed and what the goals are. Are you focusing on using compiler-based static analysis (such as Clang Static Analyzer, rustc, etc.) to catch potential issues at compile time, or do you trust the compiler to successfully compile the code and then rely on runtime mechanisms (like garbage collection in Go or Java) to resolve all problems? Or are you solely concerned with the ultimate goal of security hardening, that is, saving the system from the attackers? The complexity of memory safety issues reflects the very nature of security: it is an interdisciplinary field that combines computer science with complexity theory, making it extraordinarily difficult to fully master. Therefore, the idea of completely eliminating all security vulnerabilities by rewriting existing software with one or several “memory-safe languages” is not a realistic solution.

A programming language might be designed with memory safety mechanisms in mind, such as garbage collection and array bounds checking, which depict an ideal state at the specification level. In reality, however, different implementers may adopt various strategies based on requirements and performance metrics. For example, although Lisp typically comes with a garbage collector, supports flexible data manipulation, and features a dynamic type system, this does not guarantee that all Lisp interpreters can completely eliminate memory safety issues. If, in pursuit of certain requirements or performance, some security checks are compromised, then vulnerabilities such as buffer overflows or illegal pointer accesses may still occur.

Similarly, C/C++ have long been regarded as “unsafe” languages because they allow programmers to directly manipulate memory and perform pointer arithmetic. However, with rigorous engineering methods, such as static analysis tools, strict code reviews, and runtime detection mechanisms, it is possible for C/C++ code in specific environments to reach an almost bug-free state. This article explores some of the approaches employed by HardenedLinux over the past few decades to mitigate system complexity and enhance memory safety. Overall, memory safety is not solely the responsibility of either the compiler or the runtime; success requires coordinated efforts across language design, tool support, and engineering practices to ultimately achieve a system that resists compromise. This article does not address mandatory access control, sandboxing, Linux kernel hardening, or how to compromise a Lisp machine via a DMA attack.

Choosing a GNU/Linux Distribution for Production Systems

The early members of HardenedLinux came from backgrounds at a few commercial Linux distributions, so we know that building a GNU/Linux distribution isn’t difficult. However, creating a distribution that is maintained long-term and remains stable requires much higher standards. First and foremost, vital software and libraries must be managed by professional maintainers. When handling bug fixes and security vulnerabilities, such maintainers do not simply hand the problems off to upstream; instead, they carefully weigh the options and, in most cases, choose to backport fixes in order to preserve the original features and stability.

Based on these considerations, HardenedLinux’s best practices are primarily built on the Debian distribution, which, at the time, was the only community-driven distribution with highly skilled maintainers. Once the base distribution is selected, the primary challenge becomes addressing the quality and security risks inherent in applications and dependent libraries developed in “memory-unsafe” languages (such as C/C++). Before sanitizers and fuzzers were widely adopted, large-scale bug hunting usually required enormous manual effort. Nowadays, however, simply enabling sanitizers and running regular regression tests can effectively catch most issues during the QA process; furthermore, extensive fuzz testing can be introduced to capture potential vulnerabilities more comprehensively. Even at the GNU/Linux distribution level, QA can adopt this approach; only a few components, such as compilers and the C runtime (glibc, musl, etc.), cannot be sanitized.
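As a minimal sketch of what “enable sanitizers and run regression tests” can look like for a single C component, consider the following. The helper `copy_field`, its test, and the build line are illustrative assumptions, not code taken from Debian or HardenedLinux.

```c
/* Illustrative QA build (GCC or Clang):
 *   cc -g -O1 -fno-omit-frame-pointer -fsanitize=address,undefined field.c
 * With these flags, an out-of-bounds access or undefined behaviour reached
 * by the regression test aborts the run with a diagnostic report.
 */
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical helper: copy a length-prefixed field (one length byte
 * followed by that many payload bytes) from `in` into `out`.
 * Returns the payload length, or -1 if the input is truncated or the
 * payload does not fit into `out`. */
int copy_field(const unsigned char *in, size_t in_len,
               unsigned char *out, size_t out_cap)
{
    if (in_len < 1)
        return -1;
    size_t n = in[0];
    if (n > in_len - 1 || n > out_cap)
        return -1;
    memcpy(out, in + 1, n);
    return (int)n;
}

/* Regression test: covers the normal path and both rejection paths. */
void test_copy_field(void)
{
    const unsigned char good[] = { 3, 'a', 'b', 'c' };
    const unsigned char truncated[] = { 5, 'a', 'b' };
    unsigned char out[4];

    assert(copy_field(good, sizeof good, out, sizeof out) == 3);
    assert(memcmp(out, "abc", 3) == 0);
    assert(copy_field(truncated, sizeof truncated, out, sizeof out) == -1);
    assert(copy_field(good, sizeof good, out, 2) == -1);
}
```

If the length check were ever dropped, the truncated case would copy past the end of both arrays, and a sanitized test build would be expected to report it instead of letting the test pass silently.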

In addition, we spent years developing a state-based Linux kernel fuzzer and, in 2020, contributed a coverage filter feature to the upstream Google Syzkaller. This might be one of the few significantly influential features emerging from the Asian open-source community. Combining fuzz testing with sanitizers is a classic approach to enhancing memory safety. Taking the Linux kernel as an example, different user scenarios rely on different kernel subsystems: storage servers depend more on the file system, while network servers focus more on the network protocol stack. Later, we developed a state-model-based fuzz testing tool known as VaultFuzzer, which supports stress testing of specific target systems. For instance, on a system equipped with a 32-core CPU and 64 GB of RAM, it takes only about 20 hours to achieve approximately 72% code coverage for the main parts of the network protocol implementation. Of course, some might argue that the remaining 28% of the code still poses memory safety risks. Our response is to adopt an overall security architecture design, such as implementing runtime mitigation measures at the kernel level, to further bridge this gap. We will discuss this issue shortly.
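The fuzzer-plus-sanitizer pairing can be illustrated in user space with a libFuzzer harness. This is only a sketch: syzkaller and VaultFuzzer drive the kernel through system calls and are set up quite differently, and the target function `checksum_packet` is a hypothetical stand-in for real parsing code.

```c
/* Illustrative build (Clang):
 *   clang -g -O1 -fsanitize=fuzzer,address,undefined harness.c
 * libFuzzer supplies main() and calls LLVMFuzzerTestOneInput repeatedly
 * with mutated inputs; the sanitizers turn memory errors into crashes
 * that the fuzzer records.
 */
#include <stddef.h>
#include <stdint.h>

/* Hypothetical target: the first byte declares the payload length, and the
 * function sums that many payload bytes into *sum_out.
 * Returns 0 on success, -1 if the packet is empty or truncated. */
int checksum_packet(const uint8_t *pkt, size_t len, uint32_t *sum_out)
{
    if (len < 1 || (size_t)pkt[0] > len - 1)
        return -1;
    uint32_t sum = 0;
    for (size_t i = 0; i < pkt[0]; i++)
        sum += pkt[1 + i];
    *sum_out = sum;
    return 0;
}

/* libFuzzer entry point: feed the raw input to the target. Rejected
 * inputs are fine; only crashes and sanitizer reports count as findings. */
int LLVMFuzzerTestOneInput(const uint8_t *data, size_t size)
{
    uint32_t sum = 0;
    (void)checksum_packet(data, size, &sum);
    return 0;
}
```

The harness itself contains no checks of its own. It relies on the sanitizers to flag any out-of-bounds read that a mutated length byte might provoke in the target.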

From Exploitability to Memory Safety Solutions

The full lifecycle of a vulnerability typically includes the following stages:

- Identifying the bug and assessing whether it can be exploited
- If deemed exploitable, confirming it as a vulnerability and writing a PoC (Proof of Concept)
- Adapting the PoC into a stable exploit
- Digital arms dealers integrating it into a weaponized framework

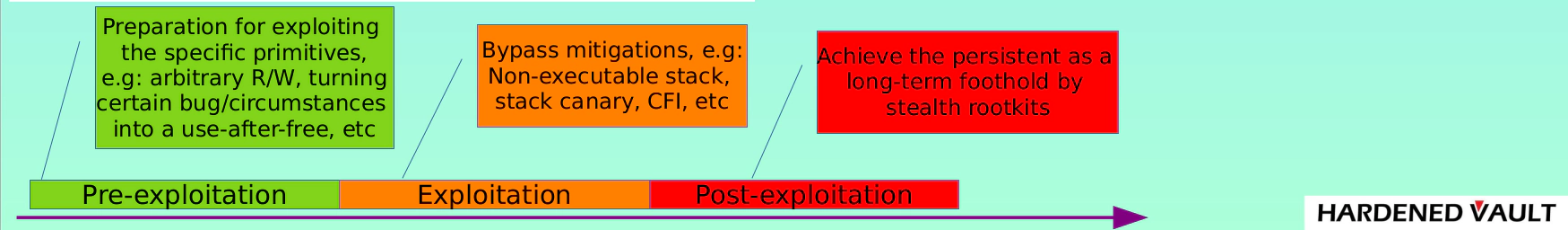

During the QA engineer’s bug hunting process, the tools and workflows used (such as fuzzers + sanitizers) are quite similar to those employed by security researchers. From the perspective of a security researcher, the process of exploiting a vulnerability can generally be divided into three stages:

- Pre-exploitation phase (bug triggering)
- Exploitation phase
- Post-exploitation phase (e.g., implanting a rootkit)

The bug triggering phase is viewed as the pre-exploitation stage. For free and open-source software projects, issues in this phase should ideally be handled by the QA process. So, aside from the commonly used sanitizers and fuzzers, what other methods can be employed during this phase to detect vulnerabilities? This is where the value of our exciting open-source project Fil-C comes into play.

Fil-C is a memory safety solution for C/C++ developed by Epic Games, employing a rather radical approach. Fil-C customizes the Clang/LLVM compiler and makes improvements to related compiler libraries to better support various transformation passes and the C runtime (such as musl); at the same time, some libraries and applications require minimal code adaptations. The following is a series of test cases:

| Test case | Sanitizers | Fil-C | Clang bounds-safety |
|---|---|---|---|
| 0-out-of-bounds-access.c | YES (ASAN) | NO | NO |
| 2-out-of-bounds-access.c | YES (ASAN) | YES | NO |
| 3-out-of-bounds-in-bounds.c | YES (ASAN) | YES | NO |
| 1-overflowing-out-of-bounds.c | NO (ASAN) | YES | N/A |
| 4-bad-syscall.c | NO (ASAN) | YES | N/A |
| 5-type-confusion.c | NO (ASAN) | YES | N/A |
| 6-use-after-free.c | YES (ASAN) | YES | N/A |
| 7-pointer-races.c | YES (ASAN/TSAN) | Partially | N/A |
| 8-data-races.c | YES (TSAN) | NO | N/A |

By comparing the detection capabilities of Fil-C with those of traditional sanitizers, we can see that although Fil-C cannot resolve all issues, it has successfully achieved memory-safe C/C++ from an exploitability standpoint, rendering common vulnerabilities harmless. Interestingly, Fil-C not only helps you identify problems, but you can even compile some critical components with it to serve as an independent runtime (perhaps we’ll see this reflected in the next generation of Epic Games’ titles?). Of course, Fil-C’s performance and engineering maturity are not yet at the point of general adoption, but it represents a very promising direction.
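To make the difference in detection models concrete, here is a conceptual sketch. ASan detects out-of-bounds accesses through poisoned redzones around objects, so an access with a large enough offset can land inside another valid object and go unreported. A scheme that checks every access against the bounds of the object the pointer was derived from does not have that gap. The `bounded_ints` type below is a hypothetical model of that idea only; it is not how Fil-C is implemented (see the Fil-C documents in the references for the actual mechanism).

```c
#include <stddef.h>

/* Hypothetical "pointer with bounds": the base address travels together
 * with the number of elements it may legally reach. */
struct bounded_ints {
    const int *base;
    size_t count;
};

/* Checked read: every index is compared against the object's own bounds,
 * so a far-away index is rejected just like an off-by-one.
 * Returns 0 and stores the element in *out, or -1 if idx is out of bounds. */
int bounded_read(struct bounded_ints p, size_t idx, int *out)
{
    if (idx >= p.count)
        return -1;
    *out = p.base[idx];
    return 0;
}
```

With a plain `const int *`, the same far-away index would be undefined behaviour that a redzone-based tool may or may not notice, depending on what happens to live at the target address.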

Exploit vs. Mitigation

Since we have discussed the bug hunting process, it is equally important to delve into mitigation. If relying on a sanitizer+fuzzer model cannot uncover all bugs, and the target program cannot adopt a memory-safe C/C++ solution like Fil-C due to performance considerations, then one can consider supplementing the system with a series of mitigation techniques provided by the compiler and the C runtime library.

These mitigation techniques include implementations at the hardware level (such as NX, CET, BTI, etc.), as well as a large number of software implementations. Do not underestimate these software-level protection measures: at critical moments, they can play a vital role. Although attention has shifted toward the kernel since “Attacking the Core” was published in 2007, countless cases demonstrate that user-space programs still need these protections. A recent write-up by ret2 described how a remote code execution (RCE) exploit against the Synology DiskStation DS1823xs+ was constructed for the Pwn2Own Ireland 2024 competition. Had Synology’s security team simply enabled Full RELRO and CFI, exploitation would have been considerably more difficult, and the outcome of the story might have been entirely different. Here is our commonly referenced list of mitigations:

| Mitigation | Compiler/linker option |
|---|---|
| Stack canary | -fstack-protector-* |
| Shadow stack | -mshstk (GCC), -fsanitize=safe-stack (Clang) |
| Fortified source | -D_FORTIFY_SOURCE=3 |
| Full ASLR with PIE | -fPIE -pie |
| Control flow integrity | x86: -fcf-protection=, arm64: -mbranch-protection=, SW |
| Full RELRO (read-only relocations) | -Wl,-z,relro,-z,now |
| Bounds check | -Xclang -fexperimental-bounds-safety (Clang) |
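As a rough sketch of how several of these options combine in one build, the example below pairs a hardened compile line with a small function. The file name, the `account` struct, and the exact flag selection are assumptions for illustration; the flags assume a reasonably recent GCC or Clang on x86-64 GNU/Linux.

```c
/* Illustrative hardened build line:
 *   cc -O2 -D_FORTIFY_SOURCE=3 -fstack-protector-strong \
 *      -fcf-protection=full -fPIE -pie -Wl,-z,relro,-z,now hardened.c
 * Notes: _FORTIFY_SOURCE only takes effect with optimization enabled,
 * and -fcf-protection is specific to x86.
 */
#include <stddef.h>
#include <string.h>

/* Hypothetical record with a fixed-size buffer next to a sensitive field,
 * the classic layout that an unchecked copy would corrupt. */
struct account {
    char name[16];
    int is_admin;
};

/* Copy `name` into the record only if it fits, terminator included.
 * Returns 0 on success, -1 if the name is too long (record untouched). */
int set_name(struct account *acct, const char *name)
{
    size_t n = strlen(name);
    if (n >= sizeof acct->name)
        return -1;
    memcpy(acct->name, name, n + 1);
    return 0;
}
```

The explicit length check is the primary defense here. The build flags are the fallback for code where such a check is missing or wrong: for example, a fortified `memcpy` can abort the process when the compiler is able to determine that the destination would be overrun.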

Call for Action

In recent years, during discussions with open source developers and even security researchers, I have repeatedly heard the objection that although Google attempts to preemptively find vulnerabilities through bug hunting, many issues still slip through. Given this, shouldn’t we simply rewrite our software and libraries using memory-safe languages? At first glance, this notion seems to have some merit, but in reality, it contradicts other facts. A few months ago, a security engineer from Southern Europe complained to me that an open source cryptography component related to hardware security modules (HSM) still had a “segmentation fault”. Puzzled, I asked whether the debug/test builds had sanitizers enabled. Unfortunately, we confirmed that this library indeed did not integrate any sanitizer. Please do not jump to conclusions: this library is not OpenSSL. OpenSSL has long integrated sanitizers and has ensured that all regression tests pass.

The argument that “if even Google cannot resolve these issues, then we must rewrite everything” is therefore problematic. From the perspective of 0ldsk00l hackers and cypherpunks, individuals should have self-sovereignty. Should we simply ignore these issues just because Google hasn’t fixed every problem? Hell no. You need to fix this shit yourself, and doing so may benefit the entire community as well.

Therefore, I call on all free and open source software developers: please at least enable sanitizers in your debug and test builds. If everyone does so, this will be the lowest cost approach to making the world a safer place.
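One low-cost way to keep this promise is to let the test suite itself notice when it was built without a sanitizer. The sketch below is an assumption about how a project might do this, not an established convention: GCC defines `__SANITIZE_ADDRESS__` under `-fsanitize=address`, and Clang exposes `__has_feature(address_sanitizer)`; the names `BUILT_WITH_ASAN` and `check_test_build` are hypothetical.

```c
#include <stdio.h>

/* Detect AddressSanitizer at compile time on both GCC and Clang. */
#if defined(__SANITIZE_ADDRESS__)
#  define BUILT_WITH_ASAN 1
#elif defined(__has_feature)
#  if __has_feature(address_sanitizer)
#    define BUILT_WITH_ASAN 1
#  endif
#endif
#ifndef BUILT_WITH_ASAN
#  define BUILT_WITH_ASAN 0
#endif

/* Returns 1 if this binary was compiled with AddressSanitizer, else 0. */
int built_with_asan(void)
{
    return BUILT_WITH_ASAN;
}

/* Hypothetical guard for a test runner: returns 0 when the build is
 * sanitized, otherwise prints a warning and returns 1 so that CI can
 * treat an unsanitized test build as a failure. */
int check_test_build(void)
{
    if (!built_with_asan()) {
        fprintf(stderr, "warning: test build is not using AddressSanitizer\n");
        return 1;
    }
    return 0;
}
```

Calling `check_test_build()` at the start of a test runner makes a missing `-fsanitize=address` visible in the test log rather than leaving it to be discovered after a crash in production.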

Rewriting Software

Rewriting software and libraries using memory-safe languages is an expensive endeavor. If you have thoroughly considered this approach and decide to proceed, please consider rewriting them in Lisp/Scheme.

References

CC Bounds Checking Example https://williambader.com/bounds/example.html

The Fil-C Manifesto: Garbage In, Memory Safety Out! https://github.com/pizlonator/llvm-project-deluge/blob/deluge/Manifesto.md

https://github.com/pizlonator/llvm-project-deluge/blob/deluge/invisicaps_by_example.md

Exploiting the Synology DiskStation with Null-byte Writes: Achieving remote code execution as root on the Synology DS1823xs+ NAS https://blog.ret2.io/2025/04/23/pwn2own-soho-2024-diskstation/

memory-safety-coverage-test https://github.com/hardenedlinux/memory-safety-coverage-test

Technical analysis of syzkaller based fuzzers: It’s not about VaultFuzzer! https://hardenedvault.net/blog/2022-08-07-state-based-fuzzer-update/